Recruitment is going through a big change. More remote work, globalization, and the sheer number of people applying for jobs mean that old-school, in-person interviews and tests are increasingly being swapped for online options.

The move online has real benefits: you can reach far more people, wherever they live, which opens up the talent pool. But here’s the catch: how do you trust the results? How can recruiters be sure the person taking the test is who they say they are, and that they’re working on their own, without someone whispering answers or looking things up?

This question is critical. The validity of assessment results directly affects who you hire and, ultimately, the quality of your new team members. Without strong ways to keep things honest, the shift to online testing could undermine the whole point of assessing candidates: if you can’t trust the scores, what good are they? Industry reports I’ve seen lately back this up; they describe assessment security and cheating prevention as major headaches for companies that use online tests. Finding solutions that are fair to everyone playing by the rules, while being tough on malpractice, is urgent.

Keeping candidate assessments fair and honest is key. It ensures the assessment reflects a candidate’s real skills and knowledge, and gives everyone applying a fair shot. In a remote setting, with no one in the room watching, maintaining that honesty gets complicated. Traditional approaches, such as having someone proctor the test manually, aren’t built for the scale, cost, or consistency that large-scale online assessments demand.

This is where modern tech solutions become necessary. AI proctoring has emerged as a tool designed to tackle exactly these problems. It offers a sophisticated way to monitor online assessments: the goal is to spot and discourage dishonest behavior while keeping the experience standardized and fair for everyone taking the test. Platforms such as HireOquick use this kind of technology with the aim of offering secure and equitable assessment experiences for candidates.

The Shifting Landscape of Candidate Assessments

The assessment landscape has shifted dramatically. Organizations are moving away from relying only on older, in-person testing methods, and the convenience and efficiency of online assessments have made them a staple of how companies hire. The change is driven largely by the need to test a bigger pool of candidates, often scattered across many locations. Online assessments are flexible: candidates can take them from home, on their own schedules, which significantly broadens where you can find talent.

But, as we touched on, the move online comes with challenges, particularly around integrity. Making sure the right person is taking the assessment, under controlled conditions, without anyone physically present is hard. Having a human proctor in the room simply isn’t workable for huge numbers of online assessments, so there is a real need for trustworthy ways to ensure both fairness and security. Fairness means everyone gets an equal chance based on what they know and can do. Security means stopping cheating and keeping the results valid. AI proctoring offers a technological way to bridge that gap by providing automated monitoring for online tests.

What Exactly is AI Proctoring for Candidate Assessments?

AI proctoring, in the context of hiring, means using artificial intelligence and machine learning to monitor candidates while they take online assessments. Its main job is to keep the testing process honest and to confirm that the registered candidate is the one taking the test. It’s more than simple surveillance; it’s about creating a controlled environment from a distance. The technology watches what the candidate is doing, and what’s happening around them, throughout the assessment session.

Typically, it monitors the video feed from the candidate’s webcam, the audio from their microphone, and the activity on their computer screen. The system analyzes this data as it arrives to find patterns or actions that might signal cheating or rule-breaking. That includes spotting other people nearby, finding prohibited items (like a phone), noticing suspicious movements, or detecting attempts to open applications that aren’t allowed. AI proctoring moves beyond the limits human proctors sometimes face by offering consistency, scalability, and continuous observation against the same criteria for everyone.

The Foundational Technology Behind AI Proctoring

AI proctoring works because several technologies operate together. They process the large volume of data collected during an assessment and are designed to spot behavior that departs from what’s expected. Understanding what’s going on under the hood helps explain how AI proctoring maintains integrity.

Computer Vision

Computer vision is a fundamental part of AI proctoring. It’s what allows the system to “see” and interpret the video coming from the candidate’s webcam, which is essential for watching both the candidate and their environment.

Facial Detection & Recognition

First, facial detection finds the faces in the video. Facial recognition can then compare the detected face to a previously verified picture of the candidate to double-check identity. This helps stop impersonation and confirms that the right person is taking the test.

Eye Tracking & Gaze Analysis

The AI can monitor a candidate’s eye movements and where they’re looking. This isn’t definitive proof of cheating, but unusually long periods of looking away from the screen, or frequent glances to the side, can be flagged for someone to review later. It helps spot potential attempts to consult other material or get help.
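As a rough illustration of look-away flagging, here is a minimal Python sketch. It assumes an upstream gaze model has already produced timestamped on-screen/off-screen samples; the `GazeSample` type, the `flag_look_aways` function, and the 5-second default are all hypothetical and not taken from any particular product.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class GazeSample:
    t: float         # seconds since the session started
    on_screen: bool  # hypothetical output of an upstream gaze model


def flag_look_aways(samples: List[GazeSample],
                    min_seconds: float = 5.0) -> List[Tuple[float, float]]:
    """Return (start, end) intervals where gaze stayed off-screen
    for at least min_seconds. Samples must be in time order."""
    flags = []
    away_start = None
    for s in samples:
        if not s.on_screen and away_start is None:
            away_start = s.t  # a look-away begins
        elif s.on_screen and away_start is not None:
            if s.t - away_start >= min_seconds:
                flags.append((away_start, s.t))
            away_start = None  # gaze is back on screen
    # Close an interval that is still open when the session ends.
    if away_start is not None and samples[-1].t - away_start >= min_seconds:
        flags.append((away_start, samples[-1].t))
    return flags
```

The intervals are only candidates for human review; a short threshold will catch more, but it will also flag more people who are simply thinking.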

Object Detection

The system can be trained to identify specific items in the camera’s view. This includes spotting things that aren’t allowed, like cell phones, unauthorized notes, or other electronic gadgets. If it sees something like that near the candidate during the assessment, it can trigger an alert.

Multi-Person Detection

The AI can also look at the video feed to see if more than one person is in the room where the assessment is happening. This helps make sure the candidate is alone and isn’t getting physical help from someone else while they’re taking the test.

Audio Analysis

Audio analysis processes the sound picked up by the candidate’s microphone. This helps monitor for unauthorized talking or suspicious sounds in the background.

Voice Detection

This feature detects whether the candidate is talking or whether other voices can be heard. Talking isn’t always a problem, but speech during parts of the test that are supposed to be silent can be flagged.

Sound Anomaly Detection

The AI can analyze audio patterns to find unusual noises. This could be sounds suggesting another person is talking, the sound of paper rustling if there shouldn’t be any, or perhaps alerts going off on a second device.

Screen Monitoring

Screen monitoring gives useful insight into what the candidate is doing on their computer. It records and analyzes the actions they take on the device they’re using for the assessment.

Tracking Application Usage

The system can detect if the candidate opens or switches to applications they aren’t supposed to use during the assessment. This helps stop them from turning to web browsers, chat programs, or anything else that could be used to cheat.
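A minimal sketch of that check, assuming the proctoring client reports which application gained focus and when. The `FocusEvent` type, its fields, and the allowlist approach are hypothetical choices made for illustration.

```python
from typing import Iterable, List, NamedTuple, Set


class FocusEvent(NamedTuple):
    t: float  # seconds since the session started
    app: str  # name of the application that gained focus (hypothetical field)


def unauthorized_switches(events: Iterable[FocusEvent],
                          allowed: Set[str]) -> List[FocusEvent]:
    """Return focus events for apps outside the allowlist.
    Names are compared case-insensitively."""
    allowed_lower = {name.lower() for name in allowed}
    return [e for e in events if e.app.lower() not in allowed_lower]
```

An allowlist (rather than a blocklist) is used here because it is easier to name the one or two tools a test permits than every tool it forbids.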

Clipboard Monitoring

Monitoring clipboard activity helps prevent candidates from copying assessment questions. It also helps stop them from pasting in answers from somewhere else.

Screen Recording

Recording the screen, either fully or in part, gives you a visual record of what the candidate did during the session. It serves as an audit trail, letting human reviewers look back later and see the candidate’s screen activity alongside the video and audio feeds.

Data Fusion & Machine Learning

The real power of AI proctoring comes from combining the data from these different sources and analyzing it together. Machine learning algorithms are what make sense of that combined data.

Combining data from the video, audio, and screen gives a much clearer picture of the candidate’s behavior than any single source. The algorithms learn from many examples of normal and suspicious test-taking, and they become good at spotting complex patterns that might suggest someone is trying to cheat. Detection can also improve over time as the models are retrained to recognize new cheating methods.
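To make the idea of fusion concrete, here is a deliberately simple Python sketch that combines per-channel scores into one session score. A weighted average stands in for the learned model described above; the weights, the 0.6 threshold, and the function names are assumptions for illustration only.

```python
from typing import Dict

# Hypothetical weights; a real system would learn or tune these.
WEIGHTS: Dict[str, float] = {"video": 0.5, "audio": 0.2, "screen": 0.3}


def fused_risk(scores: Dict[str, float],
               weights: Dict[str, float] = WEIGHTS) -> float:
    """Weighted average of per-channel scores in [0, 1].
    Channels missing from `scores` are skipped and the
    remaining weights are renormalized."""
    used = {k: w for k, w in weights.items() if k in scores}
    total = sum(used.values())
    if total == 0:
        return 0.0
    return sum(scores[k] * w for k, w in used.items()) / total


def needs_review(scores: Dict[str, float], threshold: float = 0.6) -> bool:
    """Route the session to a human reviewer when the fused score is high."""
    return fused_risk(scores) >= threshold
```

For example, `fused_risk({"video": 0.8, "audio": 0.5, "screen": 1.0})` weighs the three channels together, so one noisy signal on its own is less likely to trigger a review.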

Ensuring Fairness Through AI Proctoring: A Level Playing Field

Fairness is a fundamental part of running effective candidate assessments. AI proctoring, when it’s set up well, helps create a level playing field for everyone taking the test. It does this in several ways that promote consistency and objectivity.

Standardization: AI proctoring applies the same rules and monitoring parameters to every candidate. Wherever they are, whatever their time zone, and whichever human proctor (if any) later reviews flagged issues, the automated system uses the same logic to spot potential problems. This removes much of the inconsistency that arises when different human proctors apply the rules slightly differently.

Reducing Human Bias: Human proctors, whether they mean to or not, can introduce bias based on someone’s appearance, background, or their own subjective reading of behavior. AI algorithms are meant to flag behavior against objective criteria derived from patterns in the data, although you do have to watch for bias in the data they were trained on. This algorithmic approach can minimize subjective judgment during the initial monitoring phase.

Equitable Opportunity: By deterring and detecting cheating, AI proctoring helps ensure that assessment scores reflect what a candidate actually knows and can do. Honest candidates who have prepared aren’t put at a disadvantage because someone else tried to game the system. This protects the integrity of their results and makes sure that merit is what counts.

Clear Expectations: Good AI proctoring solutions are upfront with candidates about how they’ll be monitored. This transparency sets clear expectations for behavior during the test. Knowing the rules and understanding how the monitoring works can reduce anxiety for some candidates and promotes a sense of fairness, since everyone is subject to the same clearly explained process.

Consistent Review Processes: When the AI flags something as potentially suspicious, the flag is based on specific data points, such as how long someone looked away or which applications they opened. This gives human reviewers consistent evidence to work from. You can then apply standardized review guidelines to these flags, so that potential issues are investigated and resolved the same way for everyone.

Handling Diverse Environments: This one has its challenges, but AI systems can be run with different sensitivity settings. Organizations can adapt monitoring parameters to account for minor differences in candidates’ home environments while keeping the core integrity checks in place. The aim is to minimize false flags caused by ordinary things in a home while still catching real attempts at cheating, striving for fairness even though testing conditions won’t be identical for everyone.
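One way to choose a sensitivity setting is to replay sessions that human reviewers have already judged and count, for each candidate threshold, how many innocent sessions would be flagged and how many genuine violations would be missed. The Python sketch below assumes each session has a numeric risk score and a reviewer verdict (including for sessions that were audited without being flagged); the function name and data shape are hypothetical.

```python
from typing import Dict, List, Tuple


def threshold_report(reviewed: List[Tuple[float, bool]],
                     threshold: float) -> Dict[str, int]:
    """reviewed: (risk_score, confirmed_violation) pairs from human review.
    Counts what a given flagging threshold would have produced."""
    flagged = [(score, violation) for score, violation in reviewed
               if score >= threshold]
    false_positives = sum(1 for _, violation in flagged if not violation)
    missed = sum(1 for score, violation in reviewed
                 if violation and score < threshold)
    return {
        "flagged": len(flagged),
        "false_positives": false_positives,
        "missed_violations": missed,
    }
```

Running the report at several thresholds makes the trade-off visible: raising the threshold reduces false flags but tends to increase missed violations, and the right balance depends on the stakes of the assessment.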

Enhancing Security and Integrity with AI Proctoring

Beyond fairness, security is essential for protecting the assessment process and its results. AI proctoring strengthens the security and integrity of online candidate assessments by adding multiple layers of protection and detection.

Deterrence: Simply telling candidates that AI proctoring will be used is a strong deterrent. Knowing that the technology is watching their behavior and environment makes people who might be considering cheating think twice. This preventative effect is a significant security benefit.

Real-time Detection: AI systems can process data and spot suspicious activity as it happens. Incidents can be flagged immediately and, in some setups, can trigger a warning to the candidate or even pause the assessment. Quick detection limits how long a potential cheating attempt can last.

Identity Verification: Using facial detection and recognition, AI proctoring helps confirm that the person taking the assessment is really the candidate who registered for it. This is a crucial step, stopping unauthorized individuals from sitting the test on behalf of the actual candidate.

Preventing Impersonation: More advanced facial analysis can spot inconsistencies or if someone tries to cover up their face. This makes it significantly harder for someone who isn’t the registered candidate to successfully pretend to be them throughout the test session.

Securing Assessment Content: Screen monitoring features make it much harder for candidates to just copy and paste assessment questions or answers. By detecting unauthorized software access, AI proctoring helps ensure that valuable test content doesn’t get leaked or shared during the assessment itself.

Comprehensive Audit Trails: AI proctoring systems usually create detailed logs and recordings of every assessment session, including video, audio, and screen activity. These records provide strong evidence that can be used to confirm results or to investigate flagged issues and suspected cheating after the assessment is over.

Scalable Security: Maintaining a high level of security with traditional human proctoring becomes logistically difficult and expensive when you’re testing thousands of candidates online at once. AI proctoring scales far more easily, applying the same security checks to every session no matter how many people are testing.

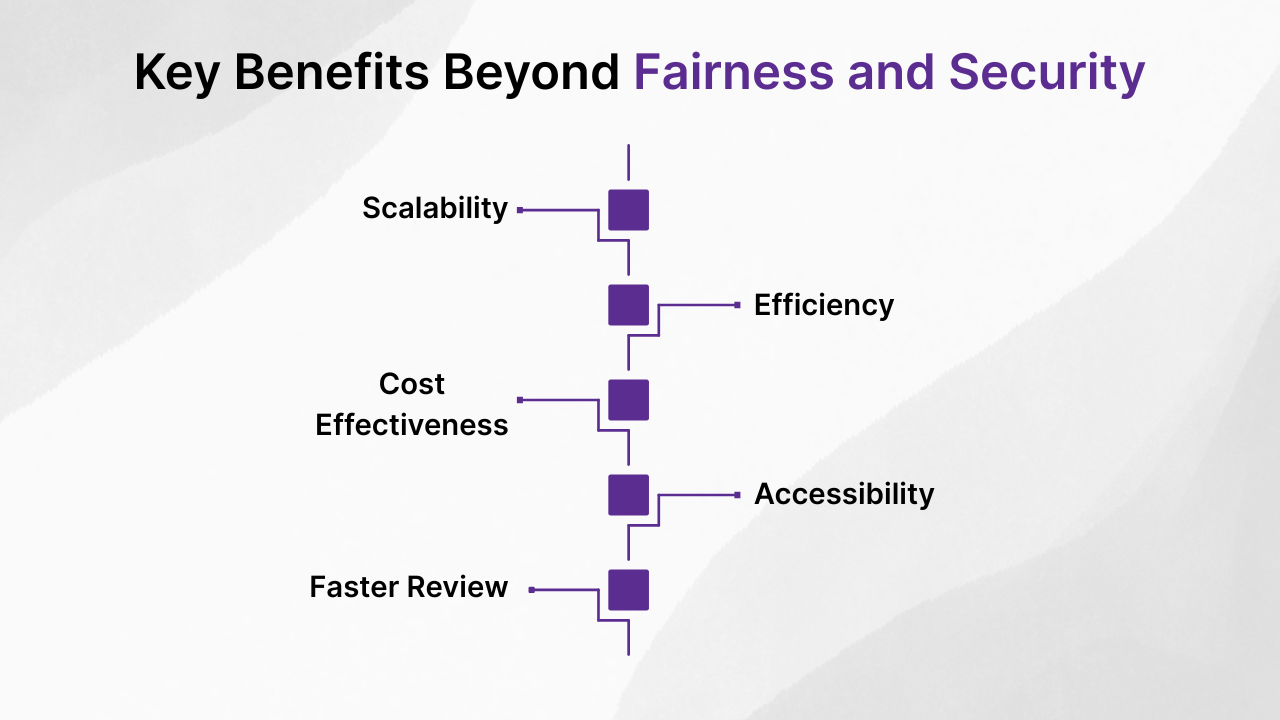

Key Benefits Beyond Fairness and Security

While fairness and security are the main goals, AI proctoring offers other advantages for companies running online candidate assessments. These relate to operational efficiency, cost, and accessibility.

- Scalability: AI proctoring systems can handle many assessments happening at the same time, which makes them well suited to big recruitment pushes, even across the globe. Unlike human proctoring, the number of candidates that can be monitored simultaneously isn’t limited by staff headcount.

- Efficiency: Automating the monitoring cuts down the workload for HR and recruitment staff. Instead of watching every candidate for the whole session, staff can focus on reviewing the sessions the AI has flagged, which is a much better use of their time.

- Cost-Effectiveness: Compared with setting up physical assessment centers or hiring large teams of live human proctors, AI proctoring is often the more budget-friendly option, especially if you test in high volumes or frequently. It reduces spending on logistics, travel, and staffing.

- Accessibility: With AI proctoring, candidates can take assessments from almost anywhere, as long as they have a good internet connection and a suitable device. This removes geographical barriers and helps candidates who would have trouble traveling to a physical location, which promotes inclusivity in the hiring process.

- Faster Review: Because the AI flags potential issues as they happen, human reviewers can quickly find the sessions that need a closer look instead of watching hours of recordings. This streamlined review speeds up how quickly you can move candidates through the assessment stage.

Addressing Challenges and Concerns in AI Proctoring

For all its advantages, AI proctoring isn’t perfect, and several concerns deserve careful thought. Discussing them openly is important both for getting the implementation right and for helping candidates feel comfortable with it.

Privacy: Proctoring collects sensitive personal information, including video, audio, and on-screen activity. Companies have to follow data protection rules such as GDPR and CCPA. Best practices include getting clear consent from candidates, being upfront about what data is collected and why, storing and transmitting it securely, and setting clear rules for how long it is retained. Anonymizing data where possible is also worth considering.

Candidate Acceptance: Some candidates, understandably, feel uneasy or anxious about being watched by AI. Clear, early communication helps. Explaining in simple terms why proctoring is necessary (to keep things fair for everyone) and how it works builds trust. Resources such as FAQs and practice tests can also help people feel more comfortable.

Technical Requirements: Candidates need a good internet connection, a working webcam, a microphone, and a suitable computer. Not everyone has equal access to this technology, and that can be a barrier. Being clear about the technical requirements, offering technical support, and providing alternative ways to be assessed for candidates who can’t meet the requirements are important steps toward inclusivity.

Potential for Algorithmic Bias: AI algorithms learn from data, so if the training data isn’t diverse and representative, the algorithm can develop biases without anyone intending it. Certain groups of people might be flagged unfairly often because of their environment, their appearance, or their accent, if those weren’t properly represented in the training data. Rigorous testing across different groups and ongoing monitoring are essential to reduce this risk.

False Positives: AI systems sometimes flag something innocent as suspicious, such as someone looking away for a second to think or a cat walking into the room. These are called false positives. It’s critical to have a mandatory step in which a human reviewer checks every flagged session. Adjusting the sensitivity settings can also reduce how often false positives occur while still catching genuine cheating attempts.

Accessibility & Inclusivity: The AI proctoring system must be accessible to candidates with disabilities. This might mean making sure it works with assistive technologies or offering alternative, equally secure arrangements for candidates who can’t be properly monitored by the standard system because of specific needs. The system needs to accommodate different circumstances without putting anyone at a disadvantage.
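The algorithmic-bias concern above can be monitored with a very simple check: compare how often sessions are flagged in each group. The Python sketch below assumes you hold a group label and a flagged/not-flagged outcome per session, collected with consent and handled under your privacy rules; the function names and the idea of watching the largest gap are illustrative assumptions, not an established fairness standard.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def flag_rates(sessions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """sessions: (group_label, was_flagged) pairs.
    Returns the share of flagged sessions per group."""
    totals: Dict[str, int] = defaultdict(int)
    flagged: Dict[str, int] = defaultdict(int)
    for group, was_flagged in sessions:
        totals[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


def max_rate_gap(rates: Dict[str, float]) -> float:
    """Difference between the highest and lowest group flag rates."""
    return max(rates.values()) - min(rates.values()) if rates else 0.0
```

A large gap does not prove bias on its own, but it is a signal that the flagged sessions for the affected group deserve a closer look by human reviewers.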

Implementing AI Proctoring Effectively: Best Practices for Candidate Assessments

Implementing AI proctoring well involves more than buying the technology. Success depends on careful planning, clear communication, and solid processes that keep it fair and working properly. Following best practices helps you get the most benefit while avoiding the common problems.

Clear Communication: Tell candidates about the AI proctoring process proactively and give them plenty of notice before the assessment. Explain why you’re doing it (to ensure fairness and integrity), what data you’re collecting, and how you’ll use and protect it. Make privacy policies easy to find and provide contact details for questions. Transparency is key to building candidate trust.

Detailed Instructions: Give candidates comprehensive instructions covering the technical requirements, how to set up their space (a quiet room and a clear desk, for example), and how they’re expected to behave during the test. Offer guides for testing their equipment beforehand. Making the technical side easy reduces stress and, importantly, reduces false flags.

Pilot Testing: Before you launch AI proctoring for a large number of candidates, run internal pilot tests. Use a diverse group of people to find and fix technical glitches, fine-tune the system’s sensitivity, and confirm that your communication strategy works. This catches problems before they affect real candidates.

Human Review Protocol: Set up a clear and consistent process for human reviewers to examine the sessions the AI flags. Define exactly what counts as a violation and how decisions will be made from the evidence the system provides. Make sure reviewers are trained to apply these standards consistently and are aware of potential biases and false positives.

Balancing Monitoring Intensity: Not all assessments need the same level of proctoring. You can tailor the monitoring setup to the importance, difficulty, and type of assessment. A less critical initial screening test might need lighter monitoring than a high-stakes final evaluation, for example. It’s about balancing security needs against candidate comfort and technical load.

Choosing a Reputable Provider: Pick an AI proctoring vendor with a solid track record, strong security infrastructure, and clear compliance with data privacy regulations (GDPR compliance and ISO certifications, for example). Ask how accurate their detection is and what their false positive rates are. A reliable partner is essential for a successful implementation.

Training Your Team: Make sure your internal staff in HR, recruitment, and IT support are fully trained on how the AI proctoring system works. They need to understand the technology, how to help candidates with common technical issues, how to interpret flagged sessions according to your review rules, and how to explain the process to candidates.
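The idea of matching monitoring intensity to the stakes of an assessment can be captured as configuration. The Python sketch below is one possible shape for it; the profile names, fields, and values are hypothetical and would need to reflect what your chosen platform actually lets you configure.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class MonitoringProfile:
    webcam: bool
    audio: bool
    screen_recording: bool
    look_away_seconds: float  # how long a look-away lasts before it is flagged


# Hypothetical tiers: lighter monitoring for early screening,
# stricter settings for high-stakes evaluations.
PROFILES: Dict[str, MonitoringProfile] = {
    "screening": MonitoringProfile(True, False, False, 10.0),
    "standard": MonitoringProfile(True, True, False, 7.0),
    "high_stakes": MonitoringProfile(True, True, True, 5.0),
}


def profile_for(stakes: str) -> MonitoringProfile:
    """Return the profile for a stakes level, falling back to the
    strictest profile when the level is unknown."""
    return PROFILES.get(stakes, PROFILES["high_stakes"])
```

Defaulting to the strictest profile is a conservative design choice; an organization that prioritizes candidate comfort might prefer to fail in the other direction or to reject unknown levels outright.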

Here’s a summary of the key things to keep in mind:

- Communicate early and be really transparent.

- Provide clear instructions on technical setup and expected behavior.

- Do thorough testing internally first.

- Have a definite process for humans to review flagged sessions.

- Adjust how intense the monitoring is based on the test.

- Choose a vendor you can trust on security and compliance.

- Make sure your internal team knows how to use it.

Choosing the Right AI Proctoring Solution for Your Candidate Assessments

Choosing an AI proctoring solution is an important decision. The platform should match your organization’s specific assessment needs, your existing technology, and your commitment to both fairness and security. Evaluating vendors means looking carefully at what they can do and what kind of support they offer.

Figure out which features are non-negotiable for you. Does the solution have robust identity checks? Can it spot screen sharing or prohibited devices? How does it handle the different environments candidates might be testing in? Prioritize the features that directly support your goals for fairness (consistent rules and transparent flagging) and security (deterring cheating, accurate detection, and good record-keeping).

Check how well it integrates with the recruitment technology you already use. A smooth connection to your Applicant Tracking System (ATS) or current assessment platform is vital for efficiency, so look for APIs or built-in connectors. HireOquick, for instance, is designed to connect with a range of recruitment tools.

Assess the vendor’s support system, what training they offer, and their overall expertise. Do they provide support for candidates having technical problems during assessments, maybe around the clock? What kind of training do they give your own team who will be setting things up and reviewing sessions? A vendor who knows their stuff and is responsive can make a really big difference in getting everything implemented successfully.

Understand the pricing, too. Is it per assessment, per candidate, or a subscription? Make sure the pricing scales sensibly with your assessment volume and fits your budget while still giving you the features you need.

Give high priority to vendors with strong data security certifications (such as ISO 27001) and a clear commitment to data privacy compliance (GDPR readiness, for example). Ask about their policies for storing data, how they encrypt it, and what happens if there’s a data breach. Protecting candidate data is paramount.

Ask potential providers for demos and case studies. See the system working, including the candidate experience, the proctor dashboard, and the review process for flagged sessions. If possible, talk to companies already using it. HireOquick, for example, offers demos that show how its AI proctoring features can support your candidate assessment needs. It can also be worth looking for solutions that offer more than proctoring, such as AI-assisted assessment creation, skill identification, or resume parsing, which could streamline your hiring process further.

AI Proctoring vs. Traditional Proctoring Methods: A Comparative Look

It helps to see where AI proctoring fits alongside other ways of keeping assessments honest. Each method has its own strengths and weaknesses.

| Feature | Manual In-Person Proctoring | Live Remote Human Proctoring | Automated AI Proctoring | Hybrid Models |
|---|---|---|---|---|
| Scalability | Low (Limited by space/staff) | Moderate (Limited by how many proctors you have) | High (Can handle huge numbers easily) | High (Combines AI scale with human checks) |
| Cost | High (Venue, staff, logistics) | Moderate to High (Proctors cost money by the hour) | Low to Moderate (Maybe a subscription or per use) | Moderate to High (AI cost + human review cost) |
| Consistency | Varies a lot (Depends on who the proctor is) | Varies a lot (Depends on who the proctor is) | High (Uses the same rules for everyone) | High (Consistent flagging + structured review) |
| Objectivity | Varies (Humans can be subjective) | Varies (Humans can be subjective) | High (Finds patterns based on algorithms) | High (AI flags + objective human review) |
| Efficiency | Low (Takes a lot of effort to set up/monitor) | Moderate (Scheduling, watching) | High (Monitoring is automated, review is flagged) | High (AI does the bulk, humans check exceptions) |
| Detection Rate | Can be good for really obvious cheating | Can be good for obvious and subtle things | Good for things it’s trained for, maybe less for totally new stuff | Potentially the best (AI finds things, human interprets) |
| Privacy Concern | Moderate (Someone is physically there) | Moderate (Video/audio is recorded) | High (Collects a lot of data, needs strong rules) | High (Similar data collection to automated) |
| Technical Need | Low (Just need basic stuff for the test) | Moderate (Need webcam, mic, good internet) | High (Might need specific hardware/software, good internet) | High (Similar to Automated AI) |

Manual In-Person Proctoring: This is the traditional approach: candidates take a test in a physical location with someone watching them directly. Good points: It is widely perceived as secure and fair, and it works well against obvious cheating. Bad points: It doesn’t scale, it’s expensive, it’s logistically demanding, and it’s limited by location.

Live Remote Human Proctoring: A human in another location watches candidates in real time over webcam and screen share. Good points: Direct observation by a person provides good security and fairness. Bad points: It can get expensive per session, scheduling across time zones is tricky, it’s less scalable than AI, and results still vary from proctor to proctor.

Automated AI Proctoring: AI technology monitors the session without a human watching the whole time, and flags suspicious events for later review. Good points: It scales well, can be cheaper, monitors consistently, and bases its flags on data. Bad points: It can produce false positives, it needs careful setup, some candidates feel uncomfortable with it, and its effectiveness depends heavily on the quality of the technology.

Hybrid Models: These systems use automated AI monitoring and add a layer of human oversight. The AI flags suspicious behavior, and human reviewers then look at those specific parts of the session. Good points: They balance the scalability and cost of AI with the human ability to make nuanced judgments, potentially offering the best of both worlds. Bad points: They require training for both the AI and the human reviewers, and they can be complex to set up.

Which method is best depends on what you’re trying to achieve with your assessments, how many candidates you have, your budget, how critical the assessment is, and, importantly, what kind of technology your target candidates generally have access to. For high-volume online candidate assessments, automated AI and hybrid models are, I think, becoming the standard.

The Future of AI Proctoring in Online Candidate Assessments

AI proctoring is still changing and improving. As AI advances and, unfortunately, people get smarter about trying to cheat, the future probably holds more refined solutions aimed at making assessments both more secure and a better experience for the candidate.

More Sophisticated Behavioral Analytics: AI proctoring will likely go beyond simple rules (like “didn’t see a face for 10 seconds”). It will probably use more advanced behavioral analysis to spot subtle differences in how someone types or moves their mouse, and perhaps signs of increased cognitive effort or emotional state (though that last one raises significant ethical questions). This could help catch cheating methods that aren’t obvious today.

Increased Integration with Adaptive Assessments: I expect to see tighter connections between AI proctoring and assessment platforms that adapt questions based on how the candidate is doing. Proctoring data could potentially influence how difficult the test gets in real time, or affect how a score is interpreted when something unusual is detected during the test.

Focus on Candidate Comfort and System Transparency: As the technology matures, I think there will be a bigger push to make the process feel less intrusive and more understandable for candidates. Better algorithms should reduce false positives, and better user interfaces could explain why something was flagged (“Hey, it looked like another voice was detected”) rather than just saying “flagged.” That kind of transparency helps build trust.

Advanced Security Against Emerging Threats: As new ways to cheat pop up, the security measures will have to evolve too. Future AI proctoring systems will need to spot increasingly complex methods of getting around monitoring, like using multiple monitors, virtual machines, or sophisticated communication devices. That takes ongoing research and development.

Predictive Analytics for Identifying Potential Risks: AI could potentially combine information gathered before the assessment (such as how the setup environment looks, or aggregated, anonymous data about candidate demographics) with early behavior during the test to predict which sessions have a higher chance of misconduct. This could help human reviewers focus their time more effectively.
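As a rough illustration of that prioritization idea, the Python sketch below ranks sessions by a simple weighted score so reviewers can start with the riskiest ones. It is a hypothetical example, not a real predictive model: the `prioritize_sessions` function, the signal names `setup_issues` and `early_flags`, and the weights are all assumptions, and it uses only setup checks and early in-test flags as inputs.

```python
def prioritize_sessions(sessions, weights=None):
    """Return session ids ordered from highest to lowest risk score.

    sessions: dict mapping session id to a dict of signal counts.
    weights:  dict mapping signal name to its weight (hypothetical values).
    """
    if weights is None:
        weights = {"setup_issues": 1.0, "early_flags": 2.0}

    def score(signals):
        # Signals without a configured weight contribute nothing.
        return sum(weights.get(name, 0.0) * count for name, count in signals.items())

    return sorted(sessions, key=lambda sid: score(sessions[sid]), reverse=True)


sessions = {
    "s1": {"setup_issues": 1, "early_flags": 0},   # score 1.0
    "s2": {"setup_issues": 0, "early_flags": 2},   # score 4.0
    "s3": {"setup_issues": 0, "early_flags": 0},   # score 0.0
}
review_order = prioritize_sessions(sessions)
```

A score like this only decides the order in which humans look at sessions. It shouldn’t be treated as evidence of misconduct on its own.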

Accessibility Improvements and Wider Device Compatibility: Future systems will likely aim to work better across different operating systems, browsers, and types of devices (maybe eventually even secure mobile environments). Efforts to improve accessibility features for candidates with different needs will also continue.

Frequently Asked Questions (FAQs)

Here are some common questions about AI proctoring in candidate assessments:

Q: Is AI proctoring always watching me?

A: AI proctoring systems monitor your video, audio, and screen activity for the duration of the assessment session. The AI automatically processes this data to find behavior that doesn’t match the assessment rules. A human reviewer might later look at the flagged parts of the recording, but typically nobody is watching the whole session live.

Q: What kind of activities does AI proctoring flag as suspicious?

A: Commonly flagged activities include looking away from the screen for too long, another person’s face or voice being detected, opening applications you shouldn’t be using, using a mobile phone, or a lot of background noise. The exact rules are set by whoever is giving the test.

Q: How does AI proctoring handle privacy?

A: Reputable AI proctoring providers follow data privacy regulations. They collect only the data they need for proctoring, store it securely, and usually delete it after a set amount of time. Organizations using proctoring are expected to inform candidates about the data collection and get their consent.

Q: Can AI proctoring tell if I’m thinking or just looking away?

A: Not perfectly. AI algorithms learn from patterns, and they can sometimes make mistakes and flag innocent behavior, which is what we call a false positive. Briefly looking away to think usually looks different from looking away for extended periods or in patterns that suggest cheating. Having a human review flagged sessions is pretty standard practice for telling the difference between innocent behavior and actual misconduct.

Q: What happens if the AI flags me incorrectly (false positive)?

A: Most systems include a process where a human reviews all the flagged sessions. A trained reviewer looks at the recording and the data around the flag to decide if any rules were actually broken. Candidates usually have a way to appeal a violation if they believe it was flagged incorrectly.

Q: Do I need special equipment for AI proctoring?

A: You typically need a computer or laptop that has a working webcam and microphone, plus a stable internet connection. The exact requirements can be a bit different depending on the platform and the specific assessment, so it’s a good idea to check the technical requirements they provide before you start the test.

Conclusion: AI Proctoring – The Standard for Reliable Online Candidate Assessments

As online candidate assessments become the standard way of doing things, ensuring they’re honest and fair is more important than ever. Fairness and security aren’t separate ideas here; they’re two sides of the same coin, both essential for getting assessment results you can trust. AI proctoring has emerged as a powerful, and frankly necessary, tool to uphold these principles in our digital world.

By using smart computer vision, audio analysis, and machine learning, AI proctoring offers monitoring that’s scalable, consistent, and based on data. It helps create a level playing field for honest candidates by making it harder to cheat and by spotting attempts when they happen. It improves security by checking identity, blocking access to information candidates shouldn’t have, and keeping detailed records. There are definitely challenges, like privacy worries and potential bias, that need careful attention and adherence to best practices, but the technology keeps getting better.

Implementing AI proctoring thoughtfully, with clear communication and robust processes for human review, is key to making it successful and accepted by candidates. It significantly improves how much you can rely on online assessments, making them a dependable basis for important hiring decisions. AI proctoring isn’t just about security, I think; it’s a fundamental part of building trust and integrity in the digital hiring process, which ultimately benefits both companies and candidates. It’s increasingly becoming the standard for reliable online candidate assessments in modern recruitment.

Ready to see how advanced AI proctoring could make your online candidate assessments fairer and more secure? You can explore how HireOquick uses AI technology, including AI Proctoring, offers seamless integrations, and even helps create assessments with AI, all designed to help streamline your hiring process. Explore HireOquick’s Candidate Assessment Solutions.